Regularization in Machine Learning: Meaning

Regularization is one of the key concepts in machine learning because it helps us choose a simple model rather than a complex one. I have covered the entire concept in two parts.

What Is Machine Learning Regularization for Dummies? By Rohit Madan, Analytics Vidhya (Medium)

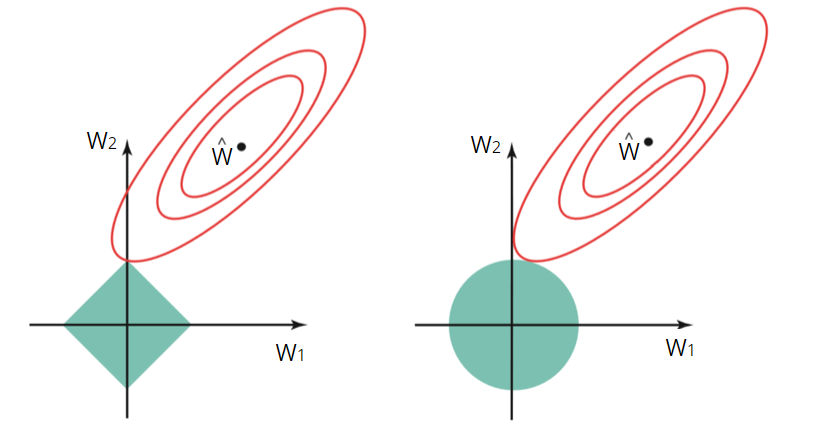

Strong L2 regularization values tend to drive feature weights closer to 0.
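To see this effect concretely, here is a minimal sketch (an illustration, not the original author's code) that fits ridge regression in closed form, w = (XᵀX + λI)⁻¹Xᵀy, on synthetic data and prints the weights for increasing λ. It assumes NumPy, no intercept term, and a hypothetical helper `ridge_weights`; the data and the λ grid are made up for illustration.

```python
import numpy as np

def ridge_weights(X, y, lam):
    """Closed-form ridge (L2) solution w = (X^T X + lam*I)^(-1) X^T y (no intercept)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

# Synthetic data: 50 samples, 3 features, known "true" weights plus a little noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
true_w = np.array([2.0, -3.0, 0.5])
y = X @ true_w + rng.normal(scale=0.1, size=50)

lambdas = [0.0, 1.0, 10.0, 100.0, 1000.0]
norms = []
for lam in lambdas:
    w = ridge_weights(X, y, lam)
    norms.append(float(np.linalg.norm(w)))
    print(f"lambda={lam:>7}: weights={np.round(w, 3)}, ||w||={norms[-1]:.3f}")
```

The norm of the weight vector falls steadily as λ grows, and at very large λ every weight is pushed close to 0.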

There are two main types of regularization techniques: L1 (lasso) regularization and L2 (ridge) regularization. I have learned regularization from different sources, and I feel learning from different…
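The two penalties differ only in how they measure the size of the weight vector. A minimal sketch, assuming NumPy and hypothetical helper names `l1_penalty` and `l2_penalty`: L1 sums absolute values, L2 sums squares.

```python
import numpy as np

def l1_penalty(w):
    """L1 (lasso) penalty: sum of the absolute values of the weights."""
    return float(np.sum(np.abs(w)))

def l2_penalty(w):
    """L2 (ridge) penalty: sum of the squared weights (squared Euclidean norm)."""
    return float(np.sum(w ** 2))

w = np.array([3.0, -4.0, 0.0])
print(l1_penalty(w))  # 7.0
print(l2_penalty(w))  # 25.0

# Same L1 size, different L2 size: L2 punishes one large weight more than
# the same total magnitude spread across several weights.
concentrated = np.array([2.0, 0.0])
spread = np.array([1.0, 1.0])
print(l1_penalty(concentrated), l1_penalty(spread))  # 2.0 2.0
print(l2_penalty(concentrated), l2_penalty(spread))  # 4.0 2.0
```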

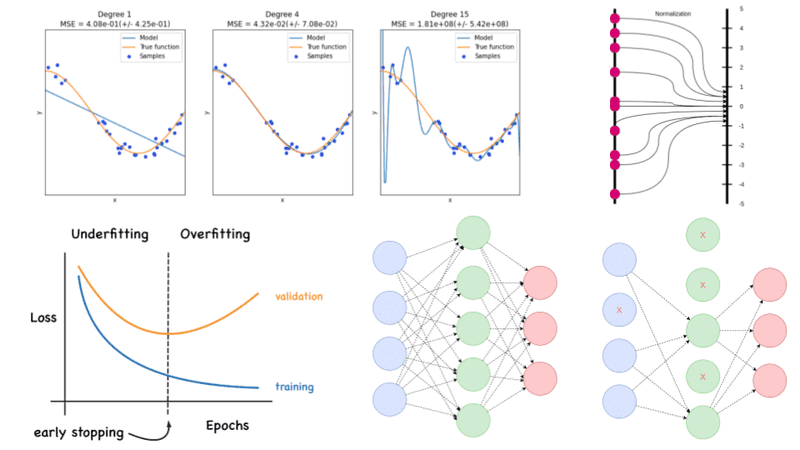

Part 2 explains what regularization is and presents some proofs related to it. Welcome to this new post of Machine Learning Explained. After dealing with overfitting, today we will study a way to correct overfitting with regularization. Lower learning rates with early stopping often produce the same effect, because the steps away from 0 aren't as large.
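The last claim can be checked on a least-squares problem. This sketch assumes full-batch gradient descent on mean squared error starting from w = 0, with "early stopping" approximated by a fixed budget of 50 steps; the data, the step budget, and the helper `gradient_descent` are illustrative assumptions. With the same budget, the lower learning rate leaves the weights closer to 0, and both stay smaller than the unregularized least-squares solution.

```python
import numpy as np

def gradient_descent(X, y, lr, n_steps):
    """Plain full-batch gradient descent on (1/n)||Xw - y||^2, starting from w = 0."""
    n = X.shape[0]
    w = np.zeros(X.shape[1])
    for _ in range(n_steps):
        grad = (2.0 / n) * X.T @ (X @ w - y)  # gradient of the mean squared error
        w -= lr * grad
    return w

rng = np.random.default_rng(1)
X = rng.normal(size=(100, 5))
y = X @ rng.normal(size=5) + rng.normal(scale=0.5, size=100)

w_full = np.linalg.lstsq(X, y, rcond=None)[0]             # unregularized least squares
w_large_lr = gradient_descent(X, y, lr=0.01, n_steps=50)   # higher rate, stopped early
w_small_lr = gradient_descent(X, y, lr=0.001, n_steps=50)  # lower rate, same budget

for name, w in [("least squares", w_full),
                ("lr=0.01, 50 steps", w_large_lr),
                ("lr=0.001, 50 steps", w_small_lr)]:
    print(f"{name:>20}: ||w|| = {np.linalg.norm(w):.3f}")
```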

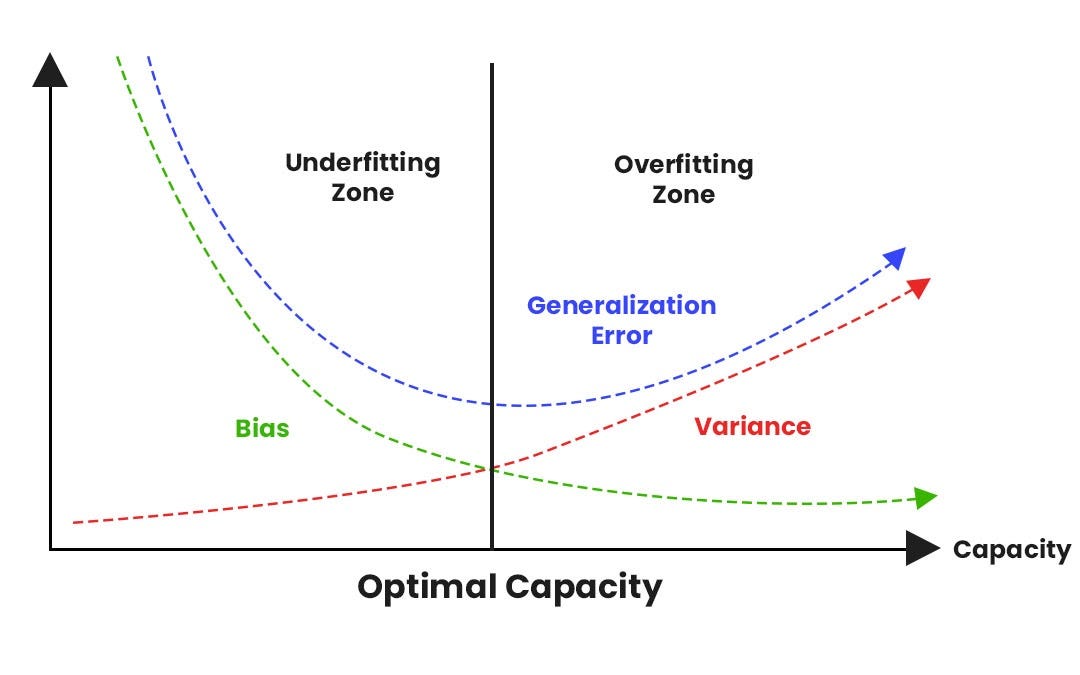

To understand the importance of regularization, particularly in the machine learning domain, let us consider two extreme cases. Regularization adds a penalty on the model's parameters to reduce the model's freedom. Regularization is an application of Occam's razor.

Hence the model will be less likely to fit the noise in the training data, and its ability to generalize will improve. Part 1 deals with the theory of why regularization came into the picture and why we need it. This is a form of regression that constrains, regularizes, or shrinks the coefficient estimates towards zero.
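Shrinkage toward zero is easiest to see in the special case of an orthonormal design matrix (XᵀX = I), an assumption made here only so that both estimators have simple closed forms. Under that assumption, and with the objectives stated in the docstrings, ridge scales every ordinary least-squares coefficient by 1/(1 + λ), while lasso soft-thresholds it, which can set small coefficients exactly to zero. The helper names `ridge_shrink` and `lasso_shrink` and the example coefficients are hypothetical.

```python
import numpy as np

def ridge_shrink(beta_ols, lam):
    """Ridge estimate for an orthonormal design, objective
    0.5*||y - X b||^2 + 0.5*lam*||b||^2: each coefficient is scaled by 1/(1 + lam)."""
    return beta_ols / (1.0 + lam)

def lasso_shrink(beta_ols, lam):
    """Lasso estimate for an orthonormal design, objective
    0.5*||y - X b||^2 + lam*||b||_1: soft-thresholding moves each coefficient
    toward zero by lam and sets coefficients with |b| <= lam exactly to zero."""
    return np.sign(beta_ols) * np.maximum(np.abs(beta_ols) - lam, 0.0)

beta_ols = np.array([3.0, -0.5, 1.0, -2.0])  # illustrative least-squares coefficients
print("ridge:", ridge_shrink(beta_ols, 1.0))
print("lasso:", lasso_shrink(beta_ols, 1.0))
```

Ridge keeps every coefficient nonzero but smaller; lasso removes the two coefficients whose magnitude does not exceed λ.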

It is a technique to prevent the model from overfitting by adding extra information to it. Because the learning rate and lambda are closely connected, tweaking both simultaneously may have confounding effects. What is regularization in machine learning?

Setting up a machine learning model is not just about feeding it data. Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set while avoiding overfitting. Regularization is essential in both machine learning and deep learning.

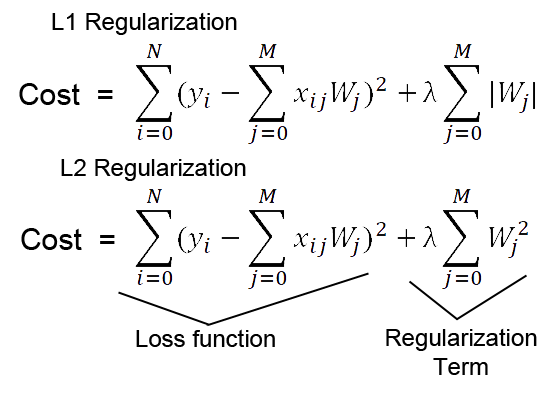

The regularization term, or penalty, imposes a cost on the optimization objective. Regularization is one of the most important concepts in machine learning. Generally speaking, the goal of a machine learning model is to find…
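One common way to write down this cost (the notation here is assumed, not taken from the original) is as a regularized objective: the average data loss ℓ over n training examples, plus λ times a penalty R on the weights w. The L1 and L2 penalties are the two usual choices of R.

$$
J(\mathbf{w}) = \frac{1}{n}\sum_{i=1}^{n} \ell\big(y_i, f(\mathbf{x}_i; \mathbf{w})\big) + \lambda\, R(\mathbf{w}), \qquad \lambda \ge 0,
$$

$$
R_{L1}(\mathbf{w}) = \lVert \mathbf{w} \rVert_1 = \sum_{j} \lvert w_j \rvert, \qquad
R_{L2}(\mathbf{w}) = \lVert \mathbf{w} \rVert_2^2 = \sum_{j} w_j^2 .
$$

Setting λ = 0 recovers the unregularized problem; a larger λ makes large weights more expensive, so the minimizer trades some training fit for smaller weights.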

Regularization is one of the most widely used techniques for penalizing complex models in machine learning. It is deployed to reduce overfitting, or equivalently to reduce generalization error, by keeping network weights small. It means the model is not able to…

In other words, regularization discourages learning a more complex or more flexible machine learning model in order to prevent overfitting. In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is the process of adding information in order to solve an ill-posed problem or to prevent overfitting. This is where regularization comes into the picture: it shrinks, or regularizes, the learned estimates towards zero by adding a penalty term to the loss function being optimized, yielding a model that can predict Y accurately.
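The "ill-posed problem" case can be illustrated with two almost identical (nearly collinear) features, which make XᵀX close to singular. This sketch assumes NumPy, synthetic data, and an illustrative λ = 1; it applies Tikhonov (ridge) regularization by adding λI to XᵀX, which sharply lowers the condition number and keeps the solution small and stable.

```python
import numpy as np

# Two nearly identical columns make X^T X almost singular, so plain least squares
# is ill-posed: small changes in y can swing the weights wildly.
rng = np.random.default_rng(2)
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=1e-4, size=200)
X = np.column_stack([x1, x2])
y = x1 + rng.normal(scale=0.1, size=200)

lam = 1.0                          # regularization strength (illustrative value)
gram = X.T @ X
gram_reg = gram + lam * np.eye(2)  # Tikhonov / ridge: add lam * I

print("condition number, no regularization:  ", np.linalg.cond(gram))
print("condition number, with regularization:", np.linalg.cond(gram_reg))

w_ls = np.linalg.solve(gram, X.T @ y)         # typically large, offsetting weights
w_ridge = np.linalg.solve(gram_reg, X.T @ y)  # small weights shared between the columns
print("least squares:", w_ls)
print("ridge:        ", w_ridge)
```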

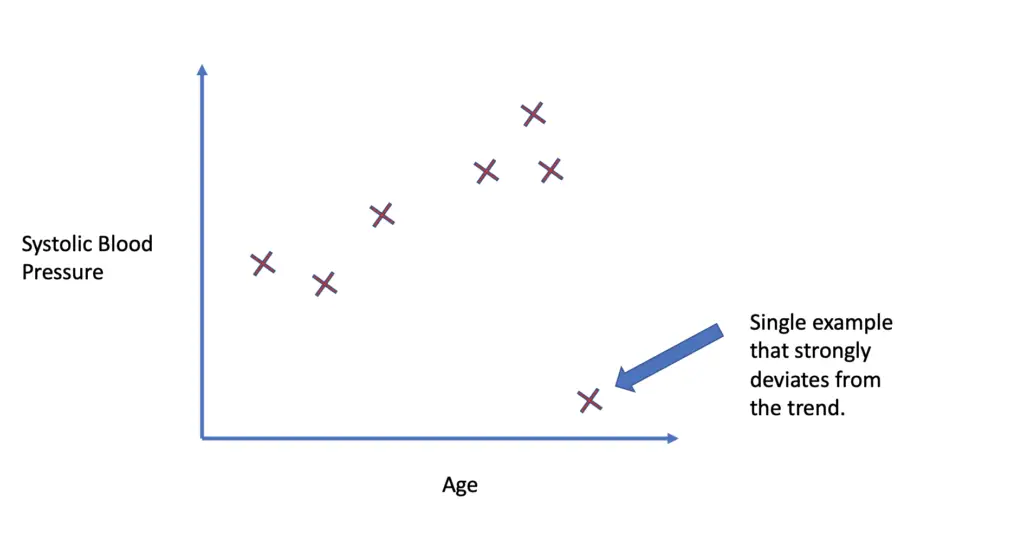

Regularization is an important concept in machine learning, and it helps solve the overfitting problem. Understanding regularization is essential for training a good model. Complex models are prone to picking up random noise from the training data, which might obscure the patterns in the data.

In the context of machine learning, regularization is the process that regularizes, or shrinks, the coefficients towards zero. In machine learning, regularization describes a technique to prevent overfitting. Regularization applies to objective functions in ill-posed optimization problems. One of the major aspects of training your machine learning model is avoiding overfitting.

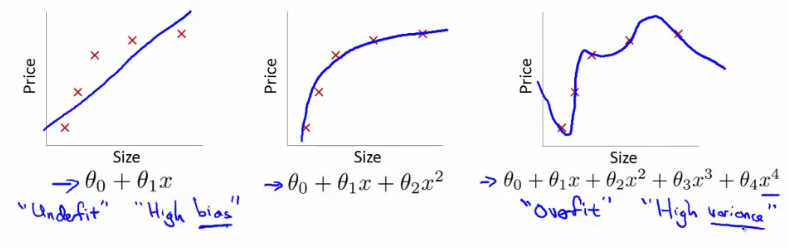

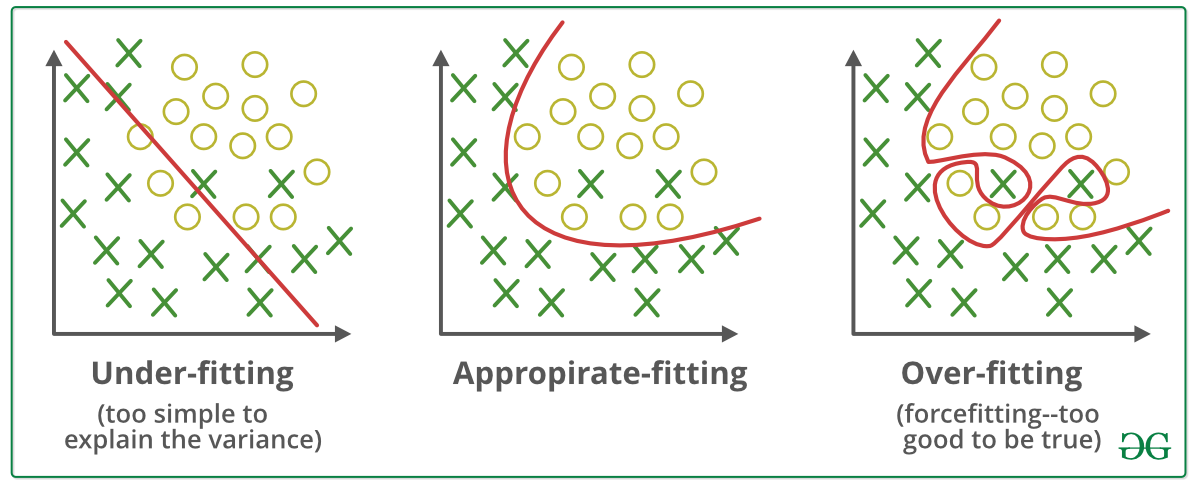

A simple relation for linear regression looks like this. There's a close connection between the learning rate and lambda. The two extreme cases are an underfit model and an overfit model.
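One way to see the connection between the learning rate and lambda: for a penalty λ‖w‖², a gradient step with learning rate η first multiplies the weights by (1 − 2ηλ) and then takes the ordinary step, so the shrinkage per step depends on the product ηλ. The sketch below assumes NumPy, hypothetical helper names, and a made-up gradient; two different (η, λ) pairs with the same product give the same shrink factor.

```python
import numpy as np

def penalized_step(w, grad_loss, lr, lam):
    """One gradient step on loss + lam*||w||^2; the penalty's gradient is 2*lam*w."""
    return w - lr * (grad_loss + 2.0 * lam * w)

def decay_then_step(w, grad_loss, lr, lam):
    """The same update written as weight decay: shrink w by (1 - 2*lr*lam), then step."""
    return (1.0 - 2.0 * lr * lam) * w - lr * grad_loss

w = np.array([1.0, -2.0, 0.5])
g = np.array([0.3, 0.1, -0.2])  # made-up gradient of the data loss, for illustration only

for lr, lam in [(0.1, 0.01), (0.01, 0.1)]:
    a = penalized_step(w, g, lr, lam)
    b = decay_then_step(w, g, lr, lam)
    print(f"lr={lr}, lambda={lam}: shrink factor={1 - 2 * lr * lam:.4f}, same update: {np.allclose(a, b)}")
```

Because the decay is governed by ηλ, changing the learning rate also changes the effective strength of the penalty, which is why tuning both at once can be confounding.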

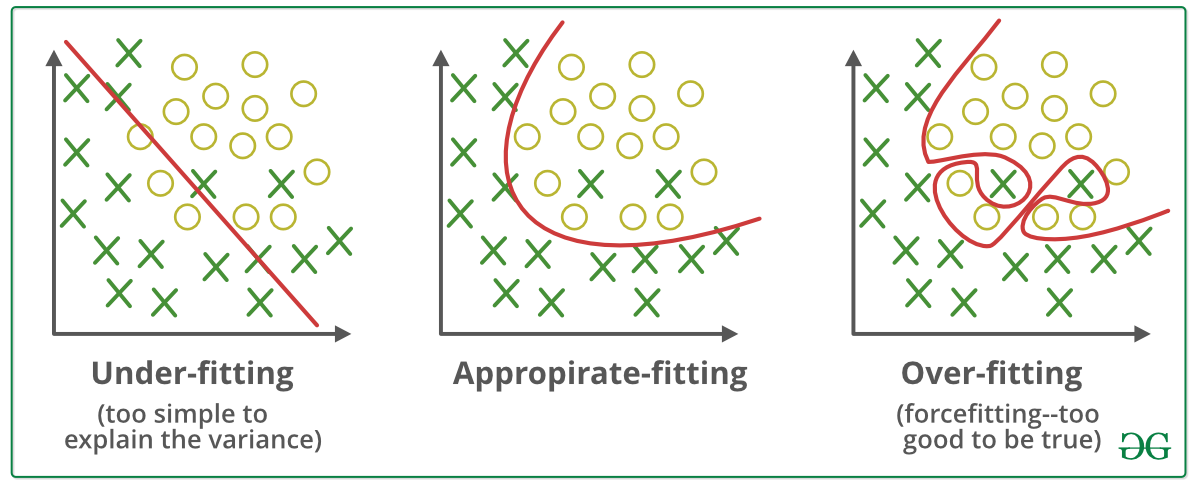

Why regularization in machine learning? Overfitting occurs when a machine learning model is constrained to the training set and is not able to perform well on unseen data.

The major concern while training your neural network, or any machine learning model, is to avoid overfitting. Regularization is one of the most basic and important concepts in machine learning. Regularization helps reduce the influence of noise on the model's predictive performance.

Therefore, regularization in machine learning involves adjusting these coefficients by shrinking their magnitude to enforce generalization. In machine learning, regularization is a procedure that shrinks the coefficients towards zero.

When you train a model with artificial neural networks, you will encounter numerous problems. Regularization reduces the model's variance, ideally without a substantial increase in bias. It is also described as a process of adding more information to resolve a complex problem and avoid overfitting.
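The variance reduction can be computed exactly for ridge regression. Assuming a fixed design X and i.i.d. noise with variance σ², the ridge estimator (XᵀX + λI)⁻¹Xᵀy has covariance σ²A XᵀX A with A = (XᵀX + λI)⁻¹, and λ = 0 gives ordinary least squares. This sketch, with NumPy, random X, and a hypothetical helper `coef_covariance`, shows the total coefficient variance falling as λ grows. It does not measure bias, which ridge does introduce, so how "substantial" the bias increase is depends on λ.

```python
import numpy as np

def coef_covariance(X, lam, sigma2=1.0):
    """Covariance of the ridge estimator w_hat = (X^T X + lam*I)^(-1) X^T y, assuming
    y = X w + noise with i.i.d. noise of variance sigma2 and a fixed X.
    lam = 0 gives ordinary least squares."""
    p = X.shape[1]
    A = np.linalg.inv(X.T @ X + lam * np.eye(p))
    return sigma2 * A @ (X.T @ X) @ A

rng = np.random.default_rng(3)
X = rng.normal(size=(30, 4))

lambdas = [0.0, 1.0, 10.0]
# Sum of the coefficient variances (trace of the covariance matrix) for each lambda.
traces = [float(np.trace(coef_covariance(X, lam))) for lam in lambdas]
for lam, tr in zip(lambdas, traces):
    print(f"lambda={lam:>5}: total coefficient variance = {tr:.4f}")
```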

"Regularization is any modification we make to a learning algorithm that is intended to reduce its generalization error but not its training error" (Ian Goodfellow). It is possible to reduce overfitting in an existing model by adding a penalty term to the cost function that gives a higher penalty to complex curves.
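A small curve-fitting sketch makes both points concrete. It assumes NumPy, 15 noisy samples of a sine curve, a degree-9 polynomial, and an illustrative penalty λ‖w‖² with λ = 0.01 applied to all coefficients (including the intercept, a simplification); the helper names are hypothetical. The penalized fit has smaller coefficients, meaning a less wiggly curve, and, consistent with the quote above, its training error cannot be lower than the unpenalized least-squares fit.

```python
import numpy as np

rng = np.random.default_rng(4)
x = np.sort(rng.uniform(-1, 1, size=15))
y = np.sin(np.pi * x) + rng.normal(scale=0.2, size=15)

degree = 9
X = np.vander(x, degree + 1, increasing=True)  # columns: 1, x, x^2, ..., x^9

def fit_penalized(X, y, lam):
    """Least squares with an L2 penalty lam*||w||^2 on all polynomial coefficients."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def train_mse(X, y, w):
    """Mean squared error on the training points."""
    return float(np.mean((X @ w - y) ** 2))

w_plain = np.linalg.lstsq(X, y, rcond=None)[0]  # no penalty: free to bend through the noise
w_pen = fit_penalized(X, y, 1e-2)               # penalty: large coefficients cost more

print("train MSE, no penalty:  ", train_mse(X, y, w_plain))
print("train MSE, with penalty:", train_mse(X, y, w_pen))
print("||w||, no penalty:  ", np.linalg.norm(w_plain))
print("||w||, with penalty:", np.linalg.norm(w_pen))
```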

Every machine learning algorithm comes with built-in assumptions about the data. In other words, this technique discourages learning a more complex or flexible model so as to avoid the risk of overfitting.

In some cases these assumptions are reasonable and ensure good performance, but often they can be relaxed to produce a more general learner that might… Sometimes one resource is not enough to get a good understanding of a concept.

Sometimes a machine learning model performs well on the training data but does not perform well on the test data. Regularization is a technique used to solve this overfitting problem in machine learning models.

It is not a complicated technique, and it simplifies the machine learning process. Overfitting occurs when a model learns the detail and noise in the training data to an extent that negatively impacts the model's performance on new data.

Regularization techniques help prevent machine learning algorithms from overfitting. In simple words, regularization discourages learning a more complex or flexible model to prevent overfitting. It also improves the model's performance on new inputs.

As seen above, we want our model to perform well on both the training data and new, unseen data, meaning the model must be able to generalize. Regularization is the method that helps it do so.
